Crowd Prediction

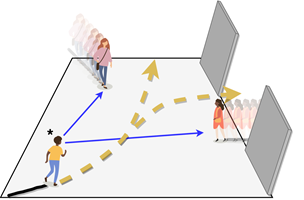

Illustration of the multi-modal trajectory prediction problem

For an autonomous or semi-autonomous robot to navigate safely in a crowded environment, it must be able to predict the future behavior of the surrounding agents. The Crowd Prediction module is therefore in charge of estimating plausible future locations of these agents, taking into account their possible interactions with each other and with the environment. The output of the module is a set of predicted trajectories that the navigation process can use in certain situations.

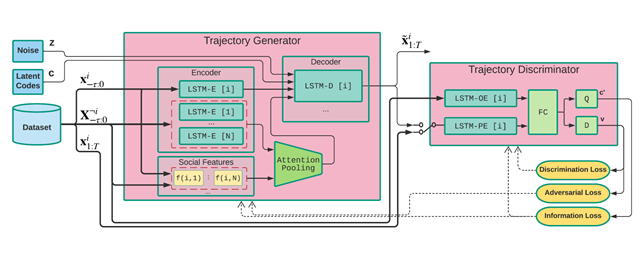

In CrowdBot we have designed and implemented a data-driven prediction system that learns from the trajectories observed in the environment to improve its predictions. This system, called “Social Ways”, uses generative adversarial networks (GANs) to map an input of N observed trajectories, one per detected agent, into K different sets of N predicted trajectories.
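The mapping from N observed trajectories to K sets of N predictions can be illustrated by its sampling structure alone. The sketch below assumes, as in a typical conditional GAN, that diversity comes from drawing a different latent noise vector for each of the K predictions. `toy_generator` is a hypothetical constant-velocity-plus-noise stand-in for the trained Social Ways generator, not its actual architecture, and the names `predict_k_samples`, `pred_len` and `noise_dim` are illustrative.

```python
import numpy as np

def toy_generator(observed, noise, pred_len):
    """Hypothetical stand-in for a trained GAN generator (NOT Social Ways itself).

    observed: (N, T_obs, 2) past positions of N agents, with T_obs >= 2.
    noise:    (N, noise_dim) latent vector, one per agent.
    Returns:  (N, pred_len, 2) predicted positions.
    """
    last_pos = observed[:, -1, :]                        # (N, 2)
    last_vel = observed[:, -1, :] - observed[:, -2, :]   # (N, 2)
    # Perturb each agent's velocity with the first two noise dimensions,
    # so that different noise draws give different futures.
    vel = last_vel + 0.1 * noise[:, :2]
    steps = np.arange(1, pred_len + 1)[None, :, None]    # (1, pred_len, 1)
    return last_pos[:, None, :] + steps * vel[:, None, :]

def predict_k_samples(observed, k, pred_len=8, noise_dim=4, seed=0):
    """Draw K latent samples and return K sets of N predicted trajectories."""
    rng = np.random.default_rng(seed)
    n_agents = observed.shape[0]
    samples = []
    for _ in range(k):
        z = rng.standard_normal((n_agents, noise_dim))   # one latent draw per set
        samples.append(toy_generator(observed, z, pred_len))
    return np.stack(samples)                              # (K, N, pred_len, 2)

# Usage: 3 agents observed for 8 steps, 5 sampled futures.
obs = np.cumsum(np.full((3, 8, 2), 0.5), axis=1)
futures = predict_k_samples(obs, k=5)
print(futures.shape)
```

In a real system, the downstream navigation module would receive the whole `(K, N, pred_len, 2)` array, so that it can reason about several plausible futures rather than a single average one.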

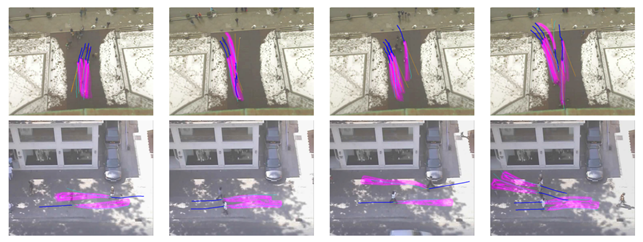

A few examples of predicted trajectories on benchmark human trajectory datasets

We use recurrent neural networks to encode the agents’ trajectories. In parallel, three simple interaction features are computed for each pair of agents: the Euclidean distance, the bearing angle and the distance of closest approach (i.e. the smallest distance the two agents would reach in the future if both kept their current velocities). The interaction features pass through an attention-pooling layer, which assigns each interaction a weight between zero and one indicating how strongly it affects the prediction for a given agent.
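The three pairwise features and the attention step can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions: the bearing angle is taken as the signed angle between agent i's heading and the direction to agent j, the distance of closest approach clamps the time of closest approach to the future (t ≥ 0), and attention is modeled as a softmax over a linear score with a hypothetical weight vector `w`. The original text does not specify how the weights are computed. In the actual network these scores come from learned layers, and the pooled quantity may be a learned embedding rather than the raw features. The function names are illustrative.

```python
import numpy as np

def interaction_features(pos, vel, i):
    """Return an (N-1, 3) array of [distance, bearing, DCA] features for agent i.

    pos, vel: (N, 2) arrays of current positions and velocities.
    """
    feats = []
    # Heading of agent i (arctan2(0, 0) returns 0 if the agent is static).
    heading = np.arctan2(vel[i, 1], vel[i, 0])
    for j in range(len(pos)):
        if j == i:
            continue
        r = pos[j] - pos[i]            # position of j relative to i
        v = vel[j] - vel[i]            # velocity of j relative to i
        dist = np.linalg.norm(r)       # Euclidean distance
        # Bearing: signed angle from i's heading to the direction of j,
        # wrapped to [-pi, pi).
        bearing = np.arctan2(r[1], r[0]) - heading
        bearing = (bearing + np.pi) % (2 * np.pi) - np.pi
        # Distance of closest approach under constant velocities,
        # considering only future times (t >= 0).
        vv = v @ v
        t_star = max(0.0, -(r @ v) / vv) if vv > 1e-9 else 0.0
        dca = np.linalg.norm(r + t_star * v)
        feats.append([dist, bearing, dca])
    return np.array(feats)

def attention_pool(feats, w, b=0.0):
    """Softmax attention over neighbours: weights lie in [0, 1] and sum to 1."""
    scores = feats @ w + b             # one scalar score per neighbour
    scores = scores - scores.max()     # shift for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    return weights, weights @ feats    # per-neighbour weights, pooled (3,) vector

# Usage: agent 0 and agent 1 are heading toward each other; agent 2 moves alongside agent 0.
pos = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 3.0]])
vel = np.array([[1.0, 0.0], [-1.0, 0.0], [1.0, 0.0]])
feats = interaction_features(pos, vel, 0)
weights, pooled = attention_pool(feats, w=np.array([-1.0, 0.0, -1.0]))
```

With the hypothetical weight vector used here, closer neighbours and smaller closest-approach distances score higher. As a result, the head-on agent 1 (distance 4, closest approach 0) receives more attention than agent 2 (distance 3, closest approach 3).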

Block diagram of the crowd prediction network

Crowd Simulation

Our technologies need tools to:

- Evaluate their performance

- Test their performance

- Benchmark their performance

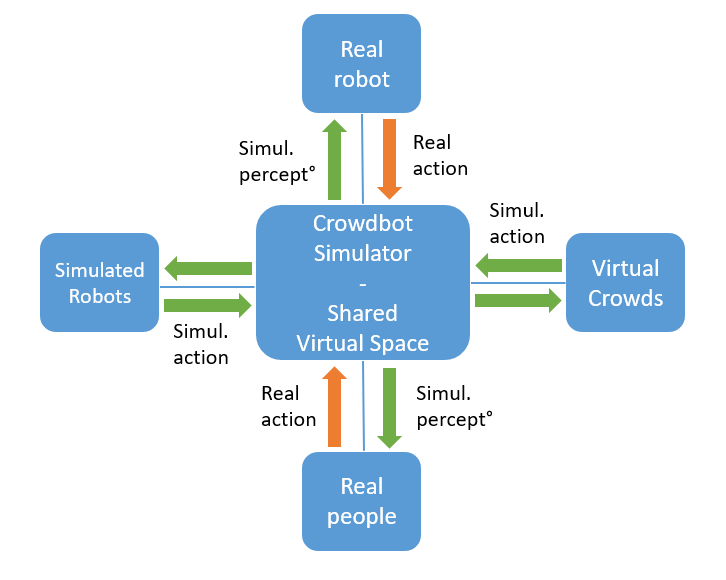

Such tests require full control over the environment, which simulation makes possible. However, implementing a simulated crowd is not an easy task, since the realism of such crowds is quickly called into question. We therefore designed a simulation tool that uses virtual reality to immerse both (real) robots and humans in a virtual world, as described in the following figure.

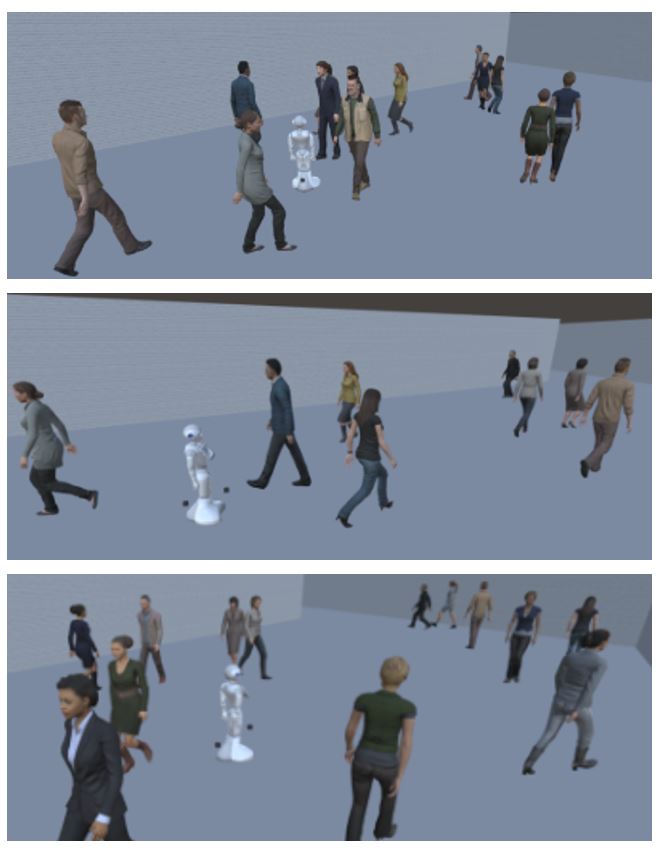

A realistic simulated environment

Pepper moving around in a crowd

Our simulation tool can simulate a crowd of realistic characters moving through realistic environments shared with our robots. To do so, we use various crowd simulation techniques (RVO, Vision-Based, PowerLaw, etc.) and high-quality 3D models.

Our 3D engine, Unity, is coupled with ROS (through ROS#) and implements sensor simulation (LiDAR, RGB-D, ultrasound, etc.) and robot control. Using Unity's physics engine, we can simulate realistic robot motion and report collisions with the crowd.

The connection with ROS allows us to use our technologies and tools as if the simulated robot were a real robot.

The simulator will be made available to the public, so that others can test their own navigation methods and evaluate them against a set of metrics. This public version of the simulator, the CrowdBot Challenge, will provide the community with a tool to benchmark the performance of different navigation techniques in predefined scenarios.

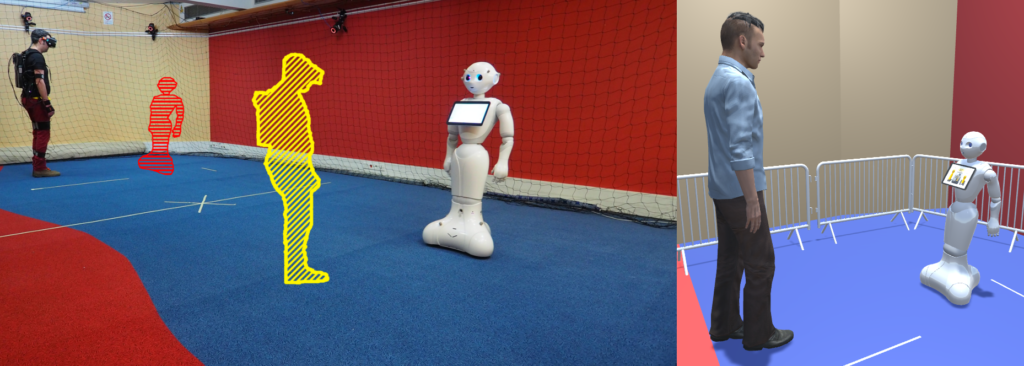

How can Virtual Reality help robots navigate crowds?

It is crucial to evaluate the ability of robots to move safely in close proximity to humans. However, testing this ability in real conditions carries a risk of collision between the robot and the experimenters or test participants. To avoid this risk, CrowdBot is exploring the use of Virtual Reality to perform such tests. The principle is illustrated above: while the robot and the human remain physically separated, each perceives the other as if they were face to face, as shown in the right-hand image.

Such a tool allows us to ensure participant safety while preserving the realistic human-robot interaction found in real conditions.