Active SLAM

Removing laser returns from dynamic obstacles

For a robot to plan a path from one point to another, it needs a map of the environment. Simultaneous localization and mapping (SLAM) allows a robot to build a map online and, at the same time, localize itself within that map. SLAM accounts for uncertainties and errors that arise from imperfect sensing and actuation. However, in crowded environments, additional challenges arise. Dynamic obstacles in the sensor field of view can be wrongly incorporated into the map. They can also cause the robot to become lost if there are too many occlusions.

In CROWDBOT, we take an active SLAM approach which balances the robot’s need to maintain good localization within parts of the map that it has already seen (i.e. areas with fewer dynamic obstacles), and the need to explore new areas to complete the map. We also conduct a pre-filtering step over the incoming sensor scans, in our case 360° 2D LIDAR, to remove points that are deemed to be returns from dynamic obstacles rather than from the static environment. This way, the robot builds clean, coherent maps that can be used for navigation.
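The pre-filtering idea can be illustrated with a minimal sketch. The source does not describe how CROWDBOT classifies returns, so this uses a simple stand-in heuristic: a beam is kept only if its range is stable between two consecutive scans. The function name `filter_dynamic_returns`, the `max_jump` threshold, and the assumption that both scans are taken from the same pose (or have already been aligned) are all hypothetical.

```python
import math

def filter_dynamic_returns(prev_ranges, curr_ranges, max_jump=0.2):
    """Mask beams whose range changed by max_jump metres or more between scans.

    Assumes both scans share the same beam angles and the same sensor pose.
    Rejected beams are replaced by NaN so that beam indices are preserved.
    """
    if len(prev_ranges) != len(curr_ranges):
        raise ValueError("scans must have the same number of beams")
    filtered = []
    for prev, curr in zip(prev_ranges, curr_ranges):
        stable = (
            math.isfinite(prev)
            and math.isfinite(curr)
            and abs(curr - prev) < max_jump
        )
        filtered.append(curr if stable else math.nan)
    return filtered

# Hypothetical 4-beam scans: a person steps into beam 1 between the two scans.
prev_scan = [2.0, 2.0, 2.0, 2.0]
curr_scan = [2.0, 1.2, 2.05, 2.0]
filtered = filter_dynamic_returns(prev_scan, curr_scan)
```

Only the masked scan would then be passed on to the mapping step. A practical filter would also have to compensate for the robot's own motion between scans, which this sketch ignores.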

LIDAR Localization

In a dense crowd, a robot is often unable to sense the static environment around it because of severe occlusion by the people nearby. This raises the chance of encountering what is known as the “kidnapped robot” scenario, in which the robot must localize itself without prior pose information. Unfortunately, existing localization solutions have been shown to perform very poorly under these circumstances.

Our goal for CROWDBOT localization is a prior-free solution that quickly produces an accurate estimate of the robot pose at initialization and is robust to partial or temporary sensor occlusions. Our proposed approach is therefore based on map-matching, as opposed to scan-matching. Furthermore, we use a branch-and-bound search to reduce the computational cost of the matching routine and enable real-time operation.
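To illustrate why branch-and-bound helps, here is a minimal sketch of a best-first branch-and-bound search that aligns a small local map with a global occupancy grid. It is not the CROWDBOT implementation: it searches over integer translations only (no rotation), scores a candidate by counting local cells that land on occupied global cells, and assumes the local cells are given as non-negative `(dx, dy)` offsets. The names `cell`, `build_max_grids`, and `match_local_map` are hypothetical.

```python
import heapq

def cell(grid, x, y):
    """Occupancy at (x, y); everything outside the grid counts as free."""
    if 0 <= y < len(grid) and 0 <= x < len(grid[0]):
        return grid[y][x]
    return 0

def build_max_grids(grid, levels):
    """max_grids[h][y][x] is the max of grid over the 2^h x 2^h window at (x, y)."""
    height, width = len(grid), len(grid[0])
    max_grids = [grid]
    for h in range(1, levels + 1):
        prev, step = max_grids[-1], 2 ** (h - 1)
        max_grids.append([
            [max(cell(prev, x, y), cell(prev, x + step, y),
                 cell(prev, x, y + step), cell(prev, x + step, y + step))
             for x in range(width)]
            for y in range(height)
        ])
    return max_grids

def match_local_map(grid, points):
    """Return ((tx, ty), score) for the best translation of points onto grid."""
    height, width = len(grid), len(grid[0])
    levels = 0
    while 2 ** levels < max(width, height):
        levels += 1
    max_grids = build_max_grids(grid, levels)

    def bound(h, x0, y0):
        # Upper bound on the score of every translation inside the
        # 2^h x 2^h window whose corner is (x0, y0); exact when h == 0.
        return sum(cell(max_grids[h], x0 + dx, y0 + dy) for dx, dy in points)

    heap = [(-bound(levels, 0, 0), levels, 0, 0)]
    while heap:
        neg_score, h, x0, y0 = heapq.heappop(heap)
        if h == 0:
            # A leaf popped first beats the bound of every open window.
            return (x0, y0), -neg_score
        half = 2 ** (h - 1)
        for cx, cy in ((x0, y0), (x0 + half, y0),
                       (x0, y0 + half), (x0 + half, y0 + half)):
            if cx < width and cy < height:
                heapq.heappush(heap, (-bound(h - 1, cx, cy), h - 1, cx, cy))
    return None, 0

# Hypothetical 8x8 global grid: an L-shaped wall at (5, 2) plus two clutter cells.
grid = [[0] * 8 for _ in range(8)]
for x, y in [(5, 2), (6, 2), (7, 2), (5, 3), (5, 4), (1, 6), (0, 0)]:
    grid[y][x] = 1
local_map = [(0, 0), (1, 0), (2, 0), (0, 1), (0, 2)]
offset, score = match_local_map(grid, local_map)
```

Because each window's bound never underestimates the best score inside it, the first single-cell candidate popped from the queue is the same optimum an exhaustive search would find, while windows with low bounds are never expanded. No prior pose is required, since the search covers the whole grid.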

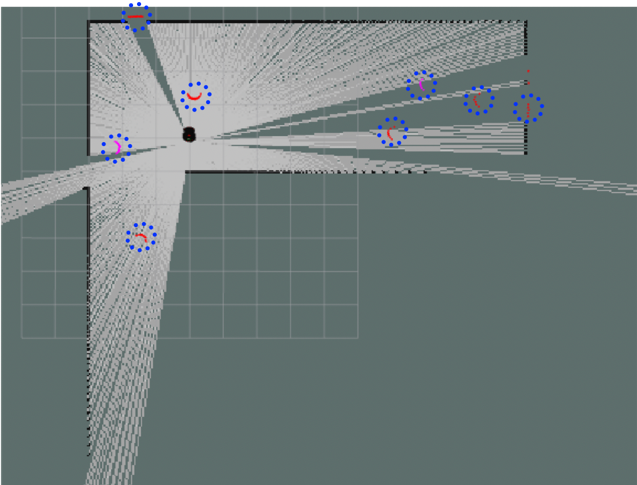

Online localization performance of our map matcher

Visual-inertial Localization

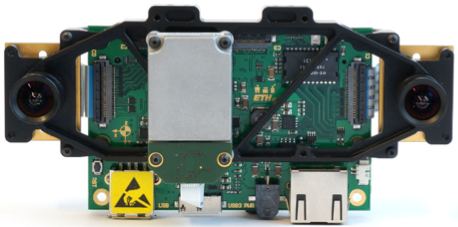

Skybotix VI-sensor for integration of stereo vision and inertial measurement data

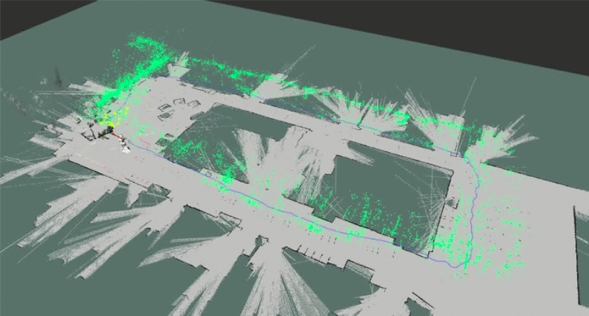

In CROWDBOT, we are also investigating localization solutions that make use of visual features on the ceiling above the robot. Because human crowds cause severe lateral occlusion, looking upward may be the most robust way for the robot to sense its surroundings and localize itself.

The fusion of visual and inertial cues has become common practice for robotic navigation due to the complementary nature of the two sensing modalities. Visual-inertial (VI) sensors designed specifically for this purpose mount the cameras and IMU on the same board. This alleviates calibration and synchronization challenges to enable tight integration of visual measurements with readings from the IMU.

VI-localization using visual features on the ceiling. Green points show stored map features; yellow points show current matched features.