Visual sensing is essential for a robot to make navigation decisions. Our objective is to enable robots to sense their surroundings by detecting and tracking the people in their neighbourhood. Since object detection can be challenging in dense crowds, flow estimation techniques will also be used to associate moving objects and their parts.

Visual Perception

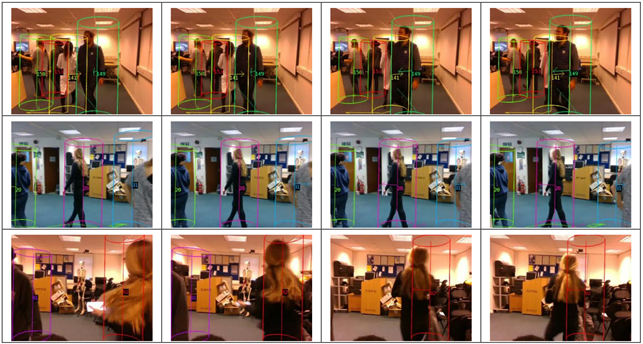

Our robots are equipped with RGB-D cameras, which provide both colour images and per-pixel depth measurements. We detect pedestrians using the rich appearance information in the images and localize them in 3D space using the measured depth. Advanced person analysis, including pose estimation and re-identification, is also carried out on the image data. Our perception pipeline follows the well-known tracking-by-detection paradigm: objects are detected independently in each frame, and a tracking algorithm associates detections that belong to the same object instance across multiple frames.
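Localizing an image detection in 3D from the measured depth can be sketched with the standard pinhole camera model. The sketch below assumes known intrinsics (`fx`, `fy`, `cx`, `cy`; the values in the usage note are made up), a depth image in metres in which 0 marks a missing reading, and a 2D box produced by some person detector. Taking the median depth inside the box and back-projecting the box centre is an illustrative heuristic, not necessarily what our pipeline does; the helper names are hypothetical. The resulting point is in the camera frame (x right, y down, z forward).

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels; real values come from camera calibration."""
    fx: float
    fy: float
    cx: float
    cy: float


def backproject(u, v, z, K):
    """Map pixel (u, v) with depth z (m) to a 3D point in the camera frame."""
    x = (u - K.cx) * z / K.fx
    y = (v - K.cy) * z / K.fy
    return (x, y, z)


def localize_detection(box, depth, K):
    """Estimate a person's 3D position from a 2D box and a depth image.

    Hypothetical helper for illustration only.
    box:   (x_min, y_min, x_max, y_max), integer pixels, max bounds exclusive
    depth: depth image as a list of rows, in metres; 0 marks a missing reading
    """
    x_min, y_min, x_max, y_max = box
    # Median of the valid depths inside the box: robust to background pixels
    # and to holes in the depth map (an assumed heuristic).
    values = [depth[r][c]
              for r in range(y_min, y_max)
              for c in range(x_min, x_max)
              if depth[r][c] > 0]
    if not values:
        return None  # no valid depth, so this detection cannot be localized
    z = median(values)
    # Back-project the box centre at that depth.
    u = (x_min + x_max) / 2.0
    v = (y_min + y_max) / 2.0
    return backproject(u, v, z, K)
```

For example, with `K = Intrinsics(500.0, 500.0, 2.0, 2.0)` and a 4x4 depth image filled with 2.0, `localize_detection((0, 0, 4, 4), depth, K)` returns `(0.0, 0.0, 2.0)`: the box centre coincides with the principal point, so the person is straight ahead at 2 m.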

LIDAR Detector

A 2D laser scanner sweeps a plane at high frequency, measuring distances at a fixed angular resolution. Using deep learning, our robots detect pedestrians from the range data collected by the 2D laser scanners. Thanks to the scanners' large field of view, a front-facing and a back-facing scanner together provide 360-degree pedestrian detection.
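The learned detector is not reproduced here, but the geometric step behind the 360-degree coverage can be sketched: each beam's range is converted from polar to Cartesian coordinates using the fixed angular resolution, and the points from the front and back scanners are transformed into one robot-centred frame. The field names loosely follow the ROS `sensor_msgs/LaserScan` message (`angle_min`, `angle_increment`, `ranges`); the mount poses, the `max_range` cut-off and the helper names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Scan:
    """One 2D laser sweep with ranges at a fixed angular resolution."""
    angle_min: float        # angle of the first beam (rad), scanner frame
    angle_increment: float  # fixed angular resolution (rad)
    ranges: list            # measured distances (m); inf/nan = no return


@dataclass
class Mount:
    """Pose of a scanner on the robot base (hypothetical values)."""
    x: float
    y: float
    yaw: float


def scan_to_points(scan, mount, max_range=30.0):
    """Convert a scan to (x, y) points in the robot frame."""
    points = []
    c, s = math.cos(mount.yaw), math.sin(mount.yaw)
    for i, r in enumerate(scan.ranges):
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            continue  # drop invalid or out-of-range returns
        a = scan.angle_min + i * scan.angle_increment
        # Polar -> Cartesian in the scanner frame.
        px, py = r * math.cos(a), r * math.sin(a)
        # Rotate by the mount yaw, then translate into the robot frame.
        points.append((mount.x + c * px - s * py,
                       mount.y + s * px + c * py))
    return points


def merge_scans(scans_and_mounts):
    """Concatenate points from several scanners into one 360-degree set."""
    cloud = []
    for scan, mount in scans_and_mounts:
        cloud.extend(scan_to_points(scan, mount))
    return cloud
```

With a front scanner at `Mount(0.3, 0.0, 0.0)` and a back scanner at `Mount(-0.3, 0.0, math.pi)`, a 1 m return straight ahead of each scanner lands at roughly (1.3, 0) and (-1.3, 0) in the robot frame, so the two half views combine into a single surround view.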

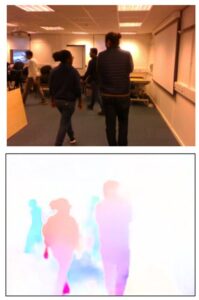

Optical Flow

When crowd density becomes very high, conventional tracking-by-detection systems based on RGB data may fail due to heavy occlusion. In such cases it helps to fall back to low-level vision techniques such as optical flow or scene flow. Optical flow estimates the apparent motion of each pixel (or small neighbourhood of pixels) between consecutive frames. This information can be used to predict motion when the tracker fails due to occlusion, for example when people close to the camera obstruct the field of view. For this purpose, we are exploring the benefits of integrating optical flow into our pipeline within the scope of CROWDBOT.
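One simple way to use flow when the detector misses an occluded person is to shift that person's last bounding box by the dominant motion inside it. The sketch assumes a dense flow field is already available (from any optical flow estimator) as a per-pixel `(dx, dy)` grid; the helper `propagate_box` and the median-shift rule are illustrative assumptions, not the method adopted in CROWDBOT.

```python
from statistics import median


def propagate_box(box, flow):
    """Predict a box's position in the next frame from dense optical flow.

    Hypothetical helper for illustration only.
    box:  (x_min, y_min, x_max, y_max), integer pixels, max bounds exclusive
    flow: flow[r][c] = (dx, dy), the displacement of pixel (c, r) from the
          current frame to the next one
    """
    x_min, y_min, x_max, y_max = box
    dxs, dys = [], []
    for r in range(y_min, y_max):
        for c in range(x_min, x_max):
            dx, dy = flow[r][c]
            dxs.append(dx)
            dys.append(dy)
    if not dxs:
        return box  # empty box: nothing to propagate
    # The median displacement is less sensitive than the mean to pixels of
    # occluders or background that fall inside the box.
    mx, my = median(dxs), median(dys)
    return (x_min + mx, y_min + my, x_max + mx, y_max + my)
```

In a tracker, a helper like this would only be called for tracks that received no matching detection in the current frame, so that the track keeps a plausible position until the person reappears.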

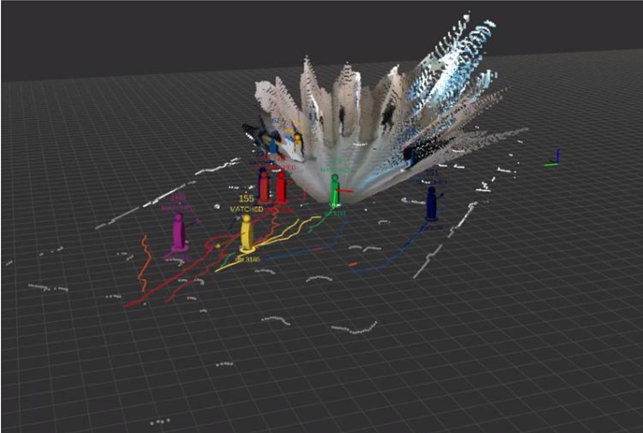

Tracker

Detections from multiple sensors are collected and passed to a tracking module. The tracker associates detections across time steps and links them into the trajectories of individual people. In CROWDBOT, we deal with dense crowd scenarios in which heavy occlusion may occur. To handle such scenarios, multiple input sources such as RGB-D cameras and LIDAR have to be combined. Our tracker follows a modular design that makes it easy to integrate multiple sensors.
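The idea of a sensor-agnostic tracker can be sketched as follows. This assumes each sensor-specific detector has already converted its output into ground-plane positions in a shared world frame, so the tracker never needs to know which sensor produced a detection. Greedy nearest-neighbour association with a fixed gating distance is a deliberate simplification chosen for brevity; practical trackers typically use optimal assignment (e.g. the Hungarian algorithm) together with a motion model such as a Kalman filter. All class names and parameter values are hypothetical and do not describe the CROWDBOT tracker.

```python
import math
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Detection:
    x: float     # ground-plane position in a shared world frame (m)
    y: float
    sensor: str  # e.g. "rgbd" or "lidar"; ignored by the tracker itself


@dataclass
class Track:
    track_id: int
    trajectory: list = field(default_factory=list)  # [(t, x, y), ...]
    misses: int = 0


class Tracker:
    """Greedy nearest-neighbour tracker over detections from any sensor."""

    def __init__(self, gate=0.5, max_misses=5):
        self.gate = gate              # max association distance (m), assumed
        self.max_misses = max_misses  # frames a track may go unmatched
        self.tracks = []
        self._ids = count()

    def update(self, t, detections):
        # Candidate (distance, track index, detection index) pairs in the gate.
        pairs = []
        for ti, track in enumerate(self.tracks):
            _, tx, ty = track.trajectory[-1]
            for di, d in enumerate(detections):
                dist = math.hypot(d.x - tx, d.y - ty)
                if dist <= self.gate:
                    pairs.append((dist, ti, di))
        # Accept the closest pairs first; each track/detection used once.
        used_t, used_d = set(), set()
        for _, ti, di in sorted(pairs):
            if ti in used_t or di in used_d:
                continue
            used_t.add(ti)
            used_d.add(di)
            d = detections[di]
            self.tracks[ti].trajectory.append((t, d.x, d.y))
            self.tracks[ti].misses = 0
        # Unmatched tracks age; drop those missed for too long.
        for ti, track in enumerate(self.tracks):
            if ti not in used_t:
                track.misses += 1
        self.tracks = [tr for tr in self.tracks if tr.misses <= self.max_misses]
        # Unmatched detections start new tracks.
        for di, d in enumerate(detections):
            if di not in used_d:
                self.tracks.append(Track(next(self._ids), [(t, d.x, d.y)]))
        return self.tracks
```

Because `update` only reads positions, adding a sensor in this sketch means writing a detector that emits `Detection` objects in the shared frame; the tracker itself stays unchanged.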